Government officials see AI and technology as top reasons that fraud, waste, and abuse will increase, but they also recognize GenAI's potential to help in the fight against fraud, according to a recent survey.

For government officials responsible for disbursing public funds and safeguarding tax dollars, there is a new threat on the horizon: artificial intelligence (AI). Particularly with the rapidly emerging capabilities of generative AI (GenAI), risk professionals at all levels of government are grappling with a world in which criminals can more easily fake documents, produce deepfake audio and video, and even create entirely new identities, all with the aid of publicly available AI tools.

At the same time, however, AI capabilities aren't available only to the criminal underworld. The same technologies are being used in applications aimed at detecting fraud, waste, and abuse (FWA) within government programs, helping officials spot problem areas more quickly than manual processes allow. Just as GenAI can help create deepfakes and the like, technology developers are building solutions that identify those fakes and act on them more quickly.

So where does that leave the government sector in regard to AI? According to the Thomson Reuters Institute’s 2024 Government Fraud, Waste & Abuse Report, it’s in a state of flux. Government officials are increasingly coming to recognize AI-driven technology’s potential for aiding their daily work, but at the same time, they’re wary of its impact on the future of crime.

The potential for bad actors

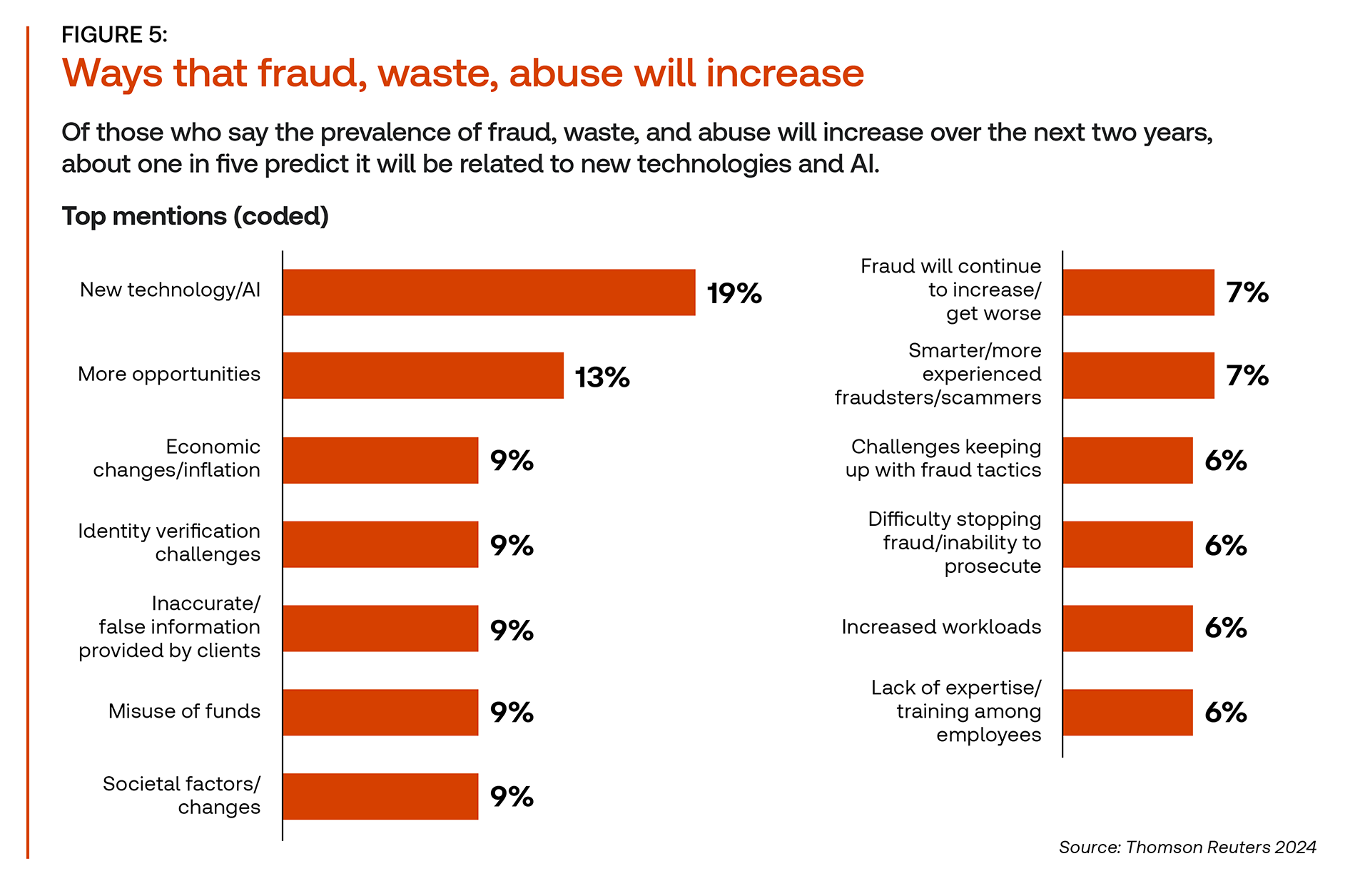

A number of avenues for crime have grown in recent years, and many of them aren't necessarily new. Economic challenges and misuse of funds remain, prosecuting bad actors is still difficult, and increased workloads and a lack of expertise allow some fraud and other illicit activity to slip through the cracks.

However, government officials today are focused on one emerging threat above all others: new technologies such as AI, according to the report. In fact, when asked about the top ways they see FWA increasing within the next two years, new technologies and AI were cited by nearly one-in-five respondents. Further, an additional 13% cited new opportunities for misconduct, such as those aided by the internet and other technological innovations.

When asked specifically what it is about AI that strikes fear in government officials' hearts, many cited how much easier AI can make many illegal activities, especially ensnaring victims. "AI makes it easier to go trawling," answered one respondent. "Technology is improving, giving people more ways to communicate with the public without being identified," responded another.

In addition, a number of respondents pointed to the impact AI technologies will have on current cybersecurity protocols. One respondent said they are most worried that "AI will be exploited to bypass security features and firewalls," while another said they expect an "increase in cyber-fraud and other frauds due to AI technology." This fear is particularly acute because emerging technologies such as quantum computing could make security protections even easier to break in the future, unless additional steps are taken to counter these bad actors.

AI’s role in combating FWA

AI does not only strike fear into government officials' hearts, however. They also view it as an opportunity, albeit one whose pros and cons many are still weighing.

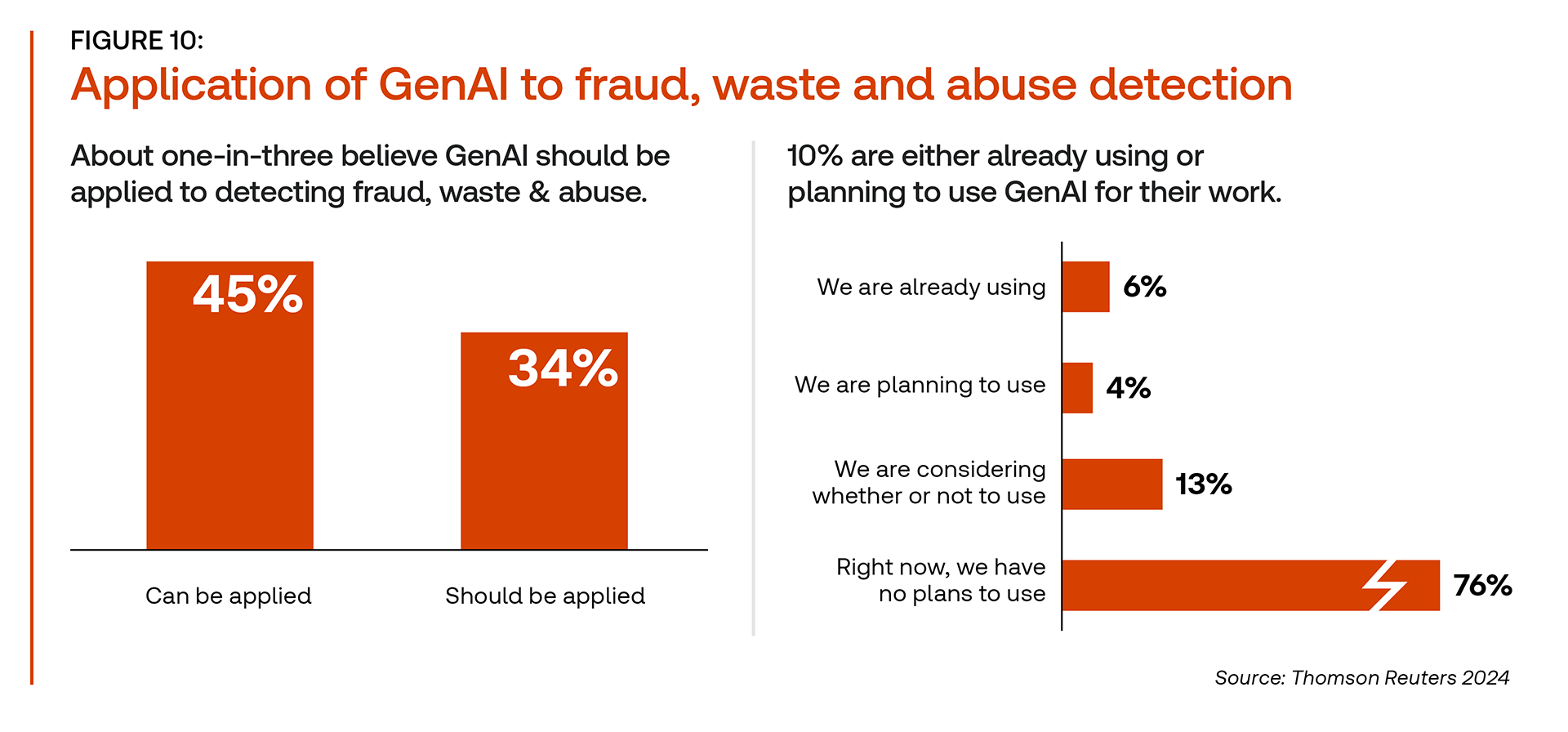

When asked whether GenAI in particular can be applied to FWA work, nearly half (45%) said they believed that GenAI can play an active role. When asked whether it should be applied to FWA (meaning respondents not only see a use case but believe that such a use case should be acted upon), about one-third of respondents answered affirmatively, while 55% said they did not know. Just 11% said they believed GenAI should not be applied to FWA work.

However, just because they view the future of GenAI in FWA work positively does not necessarily mean that government officials are rushing to adopt the technology. In fact, just 6% said they are already using GenAI for FWA work, while an additional 4% said they are making plans for its use. More than three-quarters of respondents said they have no plans to use GenAI for these purposes right now, adding that they are willing to wait until use cases become clearer and budgets open up.

By and large, government officials who conduct fraud detection most often rely on tried-and-true methods. Nearly two-thirds, for instance, report cross-referencing databases of social benefit recipients within the state against other records, such as prison records, death records, and unemployment insurance beneficiary records; and 85% said their department or agency has a hotline or other process in place for citizens or employees to lodge an FWA tip or complaint directly. Just 19% are trying new technologies of any type, a figure that is actually down nine percentage points from the year before.

Of course, these methods of fraud, waste & abuse detection are not mutually exclusive. Particularly in the current environment, government officials may be well-served by combining these tried-and-true methods with newer AI-driven technology tools. The technology has already been used effectively in areas such as Suspicious Activity Report (SAR) filings, as well as in day-to-day supervision at the European Central Bank.

It's no surprise, then, that according to the report, the top two challenges facing government FWA functions are handling an increased volume of work and limited resources and budget. It is also clear that many professionals charged with tackling fraud, waste & abuse see GenAI as a critical way to address these challenges in the future, and the time for adoption may not be far away.

You can access the Thomson Reuters Institute's 2024 Government Fraud, Waste & Abuse Report here.