In a high-stakes industry like legal practice, the accuracy and relevance of legal resources are non-negotiable. Thomson Reuters makes sure legal professionals are at the heart of everything we do – from writing and maintaining content to developing AI that’s designed with lawyers in mind. We know that specialization matters, which is why our AI is tested and refined not just by engineers, but also by actual attorneys with real practice experience.

Why legal input is crucial

The law is a complex universe – each case requiring careful and thorough research, each document requiring specific language to ensure its validity and compliance. When lawyers leverage legal technology to find answers, assist with drafting, or carry out multi-step workflows, they need to feel confident that they’re not missing something. Many of the tasks we expect legal AI to perform involve a nuanced understanding of the law and its application, which is often anything but black-and-white. Without meticulous testing and grading by legal experts, the door is left open for costly mistakes. Take the Vals Legal AI Report, for example. When it tested various legal AI platforms against a human lawyer, 3 out of 4 platforms could not identify and extract contract language relating to a specified clause. The one that did? Thomson Reuters CoCounsel.

What this looks like in practice

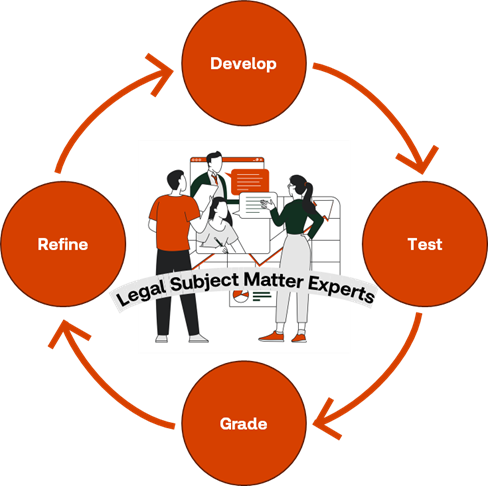

Thomson Reuters is deeply committed to using legal and subject matter experts when testing and grading the output of our legal AI.

For our developers working on AI for legal research, that means partnering with our licensed Westlaw attorney editors. Our editors help identify data that should be referenced for each skill, create standards by which to test the AI, conduct the AI testing, and evaluate and grade the output. Our attorney editors have conducted hundreds of evaluation sessions and graded thousands of responses to ensure that our AI-enhanced tools like CoCounsel meet accuracy standards, adhere to source documents, and apply logical reasoning that accounts for nuances in the law.

Similarly, our Practical Law attorney editors are integral to our development and improvement of agentic workflow capabilities in CoCounsel and beyond. Not only do our subject-matter experts create gold-data tests for our new agentic capabilities, but they also improve how we conduct human grading and output evaluation for complex, multi-step legal tasks that are performed autonomously.

CoCounsel’s outputs must meet complex criteria like factual accuracy and logical consistency, which aren’t easily judged with simple true-or-false tests. Evaluating legal content is also often subjective – some users prefer detailed summaries, others concise ones – which makes automated assessment challenging. Enter our Trust Team. The Trust Team is a group of experienced legal professionals with backgrounds ranging from in-house counsel to law firms of every size. They create tests that represent actual work attorneys need to complete, set up the gold-standard response for each test, and then run these tests against CoCounsel’s skills for automated evaluation. Using this process, CoCounsel’s skills have undergone over 1,000,000 tests, and any output that does not meet the attorneys’ standards is reviewed manually to ensure reliability.
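For readers who want a concrete picture of that loop, here is a minimal sketch in Python. It is an illustration only and does not describe how CoCounsel is actually tested: the names `GoldTest`, `overlap_score`, `triage`, and `fake_skill`, the 0.8 threshold, and the word-overlap metric are all assumptions made up for this example. Real legal grading criteria would be far richer than a word count.

```python
from dataclasses import dataclass


@dataclass
class GoldTest:
    """Hypothetical test case: a task prompt plus an attorney-written gold response."""
    prompt: str
    gold_response: str


def overlap_score(output: str, gold: str) -> float:
    """Placeholder metric (an assumption): share of gold-response words found in the output."""
    gold_words = set(gold.lower().split())
    if not gold_words:
        return 0.0
    output_words = set(output.lower().split())
    return len(gold_words & output_words) / len(gold_words)


def triage(tests, run_skill, threshold=0.8):
    """Run every test through the skill and split results into passed vs. needs manual review."""
    passed, needs_review = [], []
    for test in tests:
        output = run_skill(test.prompt)
        score = overlap_score(output, test.gold_response)
        bucket = passed if score >= threshold else needs_review
        bucket.append((test, output, score))
    return passed, needs_review


def fake_skill(prompt: str) -> str:
    """Stand-in for the system under test; returns canned answers for the demo."""
    canned = {
        "Extract the governing law clause.": "This agreement is governed by Delaware law.",
        "Extract the termination clause.": "No clause found.",
    }
    return canned.get(prompt, "")


sample_tests = [
    GoldTest("Extract the governing law clause.",
             "This agreement is governed by Delaware law."),
    GoldTest("Extract the termination clause.",
             "Either party may terminate with thirty days notice."),
]

passed, needs_review = triage(sample_tests, fake_skill)
print(f"{len(passed)} passed automatically, {len(needs_review)} sent to manual review")
```

The point of the sketch is the routing, not the metric: automated scoring handles the clear passes, and anything that falls short of the gold standard goes to a human reviewer.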

Bringing it all together

The recent development and release of the CoCounsel workflow Draft a Discovery Request highlights the importance of collaboration between tech and legal expertise. It’s not enough to build an AI tool with the hope it might be useful to attorneys. You need actual lawyers to tell you what a specific workflow looks like now, what their pain points are, and how AI can alleviate those challenges.

When creating Draft a Discovery Request, the CoCounsel team relied on three separate teams of legal subject matter experts to guide the build: seven litigators from the Trust Team to create consistent automated and manual testing during development, an AI editorial team to provide process and grading input, and 13 Practical Law editors with litigation experience ranging from labor and employment to intellectual property law to review formatting, style, and the substantive output. During the testing and review process, the Practical Law editors noted that the skill output omitted several key definitions and neglected to include requests relating to interstate commerce issues and additional parties connected to the suit. Any one of these errors could result in confusion or an objection from opposing counsel, but careful editorial review allowed our CoCounsel team to adjust the output requirements and account for the necessary legal considerations and best practices. Without the review of the 20 legal subject matter experts working on this skill, these issues would not have been flagged, because they were not technical errors but substantive or procedural ones. Legal expertise and guidance were critical to verifying the accuracy of the workflow and ensuring that this is AI lawyers will find useful and trustworthy.

AI that thinks like a lawyer

Having attorneys and legal subject matter experts provide guidance, develop test criteria, and evaluate legal AI is critical to providing a truly valuable tool for legal professionals. You can’t have AI that thinks like a lawyer if no lawyers are involved in the process. That’s why CoCounsel Legal is the AI lawyers swear by.