The next phase of AI leadership depends less on building models and more on architecting reusable, machine-readable intelligence

Key takeaways:

- Shifting from engineering to architecture: Focusing solely on building better AI models and engineering solutions leads to isolated, non-reusable outputs. Instead, organizations should build AI into the broader enterprise, emphasizing reusable, machine-readable intelligence that integrates with business operations and data structures.

- Regulation as opportunity for reusability and efficiency: Regulatory frameworks are not just compliance burdens; they are also catalysts for sustainable AI. By mandating standardized, machine-readable data, these regulations force organizations to design systems for reuse, enabling operational efficiency and scalable innovation.

- Reusable enterprise is the path to sustainable reinvention: The future of AI leadership lies in building adaptable, reusable data and AI infrastructures. When standardized data, AI models, and regulatory compliance reinforce each other, organizations can continuously reinvent themselves, support multiple business outcomes from the same information assets, and achieve compound returns on their investments.

Across industries, executives are confronting an uncomfortable truth: AI projects are delivering outputs, not outcomes.

For years, organizations have poured time and capital into the mechanics of AI — the algorithms, the computation power, the data pipelines, and the engineering teams to support them. Yet results remain uneven. Models keep getting larger, but lasting, reusable business value hasn’t followed.

The problem isn’t the math; it’s the mindset.

Too many enterprises have tried to engineer AI into existence instead of architecting it into the enterprise. The focus has been on perfecting models, not integrating them into the broader data and operational fabric of the business. The assumption has been that a technically superior model naturally creates a competitive edge. It doesn’t.

Without consistent governance, shared definitions, and reusable data structures, every AI initiative becomes its own isolated experiment. One line of business builds a credit-risk model. Another develops an environmental, social, and governance (ESG) classifier. A third deploys a generative assistant for customer support. Each team moves fast, but none build on each other’s work. The result is a proliferation of proofs of concept — impressive on paper but disconnected in practice.

And this fragmentation carries a financial cost. Every new model adds complexity: new pipelines, new monitoring requirements, and additional governance checkpoints. These systems rarely scale together, and as integration demands grow, executives find themselves in a paradox: they make massive investments in AI infrastructure yet see declining agility and uncertain ROI.

The AI engineering mindset has optimized the individual parts of a production solution, not the whole. It has produced models that predict, but not organizations that learn.

In short, the AI engineering mindset has reached its limit, a sign that AI is entering sustainable growth cycles. Many leaders are beginning to realize that they don’t need more AI engineers; rather, they need system designers who can embed intelligence into reusable business frameworks, all while navigating a regulatory environment increasingly defined by machine-readable data standards such as the Financial Data Transparency Act (FDTA) and Standard Business Reporting (SBR).

Regulation as catalyst, not constraint

At first glance, FDTA and SBR may appear to be just another layer of regulatory complexity. They are not. In fact, they represent one of the most powerful architectural opportunities available to organizations today.

By mandating machine-readable data standards, these frameworks force companies to design for reuse. They turn what once felt like a compliance exercise into an infrastructure strategy — one that connects regulatory requirements directly to operational efficiency. Build once. Reuse often.

For decades, compliance has been treated as a cost of doing business. Under FDTA and SBR, it can become the scaffolding of reinvention. Machine-readable, standardized data provides the foundation for models that are verifiable, shareable, and reusable across domains. Reporting ceases to be an afterthought and becomes a living data layer that fuels forecasting, stress testing, and product innovation.
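As a rough illustration of what "machine-readable, standardized data" can mean in practice, the sketch below defines one shared record type, validates it at creation, and serializes it to JSON that other systems can parse back. The class name `ReportedFact`, its fields, and the helper functions are hypothetical and are not drawn from any actual FDTA or SBR taxonomy.

```python
import json
from dataclasses import dataclass, asdict
from datetime import date


# Hypothetical shared definition; field names are illustrative only and
# do not come from any real FDTA or SBR taxonomy.
@dataclass(frozen=True)
class ReportedFact:
    entity_id: str    # identifier of the reporting entity
    concept: str      # agreed business term, e.g. "TotalAssets"
    period_end: date  # end of the reporting period
    value: float
    unit: str         # three-letter code, e.g. "USD"

    def __post_init__(self):
        # Enforce the shared definitions once, where the record is created.
        if not self.entity_id or not self.concept:
            raise ValueError("entity_id and concept are required")
        if len(self.unit) != 3 or not self.unit.isupper():
            raise ValueError("unit must be a three-letter uppercase code")


def to_machine_readable(facts):
    """Serialize facts to JSON that a downstream system can parse."""
    rows = [{**asdict(f), "period_end": f.period_end.isoformat()} for f in facts]
    return json.dumps(rows, sort_keys=True)


def from_machine_readable(payload):
    """Rebuild validated facts from the JSON produced above."""
    return [
        ReportedFact(**{**row, "period_end": date.fromisoformat(row["period_end"])})
        for row in json.loads(payload)
    ]
```

Because validation lives in the record itself, a forecasting model, a stress test, and a regulatory filing would all read the same checked definitions rather than each re-deriving them.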

When viewed through this lens, regulation isn’t an obstacle; it’s the blueprint for sustainable AI. It forces clarity, consistency, and interoperability, qualities every enterprise says it wants but few achieve voluntarily. Regulation may finally deliver what AI engineering alone could not: the discipline of reusability.

From proofs of concept to proofs of architecture

For most organizations, AI success has been measured by the number of proofs of concept completed, or how fast a model moves into production. However, the real test of maturity isn’t how many experiments you run; it’s how easily those experiments can be scaled, reused, or extended.

That’s where the next evolution lies. We are now shifting from proofs of concept to proofs of architecture. And that means the question leaders should be asking isn’t “Did it work once?” but “Can it work again, and with half the effort?” Only when a single domain’s data can serve multiple regulatory, compliance, and analytical purposes can the enterprise start to gain compound returns on its information assets.

This approach turns data from a static resource into a dynamic capability. AI is no longer something you deploy; rather, it’s something you design for reuse.

Engineering adaptability

Organizations that embrace this shift are learning to engineer adaptability rather than one-off innovation. Their data and AI systems act like interchangeable components, each capable of supporting new regulations, mergers, or market disruptions without starting from scratch.

Some industry examples of this development include:

- Financial services — Stress-testing data used for regulatory compliance can also inform pricing analytics and liquidity simulations, reducing cycle time between audit and strategy.

- Healthcare — Patient outcome models built for quality reporting can be reused to predict staffing needs or optimize clinical supply chains, extending beyond compliance and into operations.

- Legal and compliance sectors — AI used for document classification under discovery protocols can be repurposed for internal policy audits or ESG disclosure mapping, turning regulatory data into a strategic asset.

- Manufacturing and supply chain — Sensor and maintenance data initially used for safety reporting can drive predictive production planning and carbon-emission forecasting under emerging sustainability standards.

- Public sector and critical infrastructure — Data collected for transparency and open-data mandates can be reused to model risk exposure across utilities, cybersecurity, and climate resilience programs.

In each of these cases, the same information infrastructure supports different outcomes. That’s the hallmark of a reusable enterprise.
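The financial services example can be sketched in a few lines, under stated assumptions: one set of standardized stress-test records feeds both a compliance view and a strategy view without being rebuilt. The record type `StressResult`, both functions, and all figures are hypothetical, and the sketch assumes every projected loss is greater than zero.

```python
from dataclasses import dataclass
from statistics import mean


# Hypothetical standardized record; names and figures are illustrative only.
@dataclass(frozen=True)
class StressResult:
    portfolio: str
    scenario: str
    loss: float           # projected loss under the scenario (assumed > 0)
    liquid_assets: float  # assets available to absorb that loss


def regulatory_summary(results):
    """Compliance view: worst projected loss per portfolio."""
    worst = {}
    for r in results:
        worst[r.portfolio] = max(worst.get(r.portfolio, 0.0), r.loss)
    return worst


def liquidity_coverage(results):
    """Strategy view: average ratio of liquid assets to projected loss."""
    ratios = {}
    for r in results:
        ratios.setdefault(r.portfolio, []).append(r.liquid_assets / r.loss)
    return {portfolio: mean(values) for portfolio, values in ratios.items()}


# The same records serve both purposes.
results = [
    StressResult("retail", "rate_shock", 40.0, 100.0),
    StressResult("retail", "recession", 80.0, 100.0),
    StressResult("commercial", "rate_shock", 50.0, 150.0),
]
print(regulatory_summary(results))
print(liquidity_coverage(results))
```

The design choice worth noting is that neither function owns the data: adding a third consumer, such as a pricing model, would mean writing another function over the same records rather than building another pipeline.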

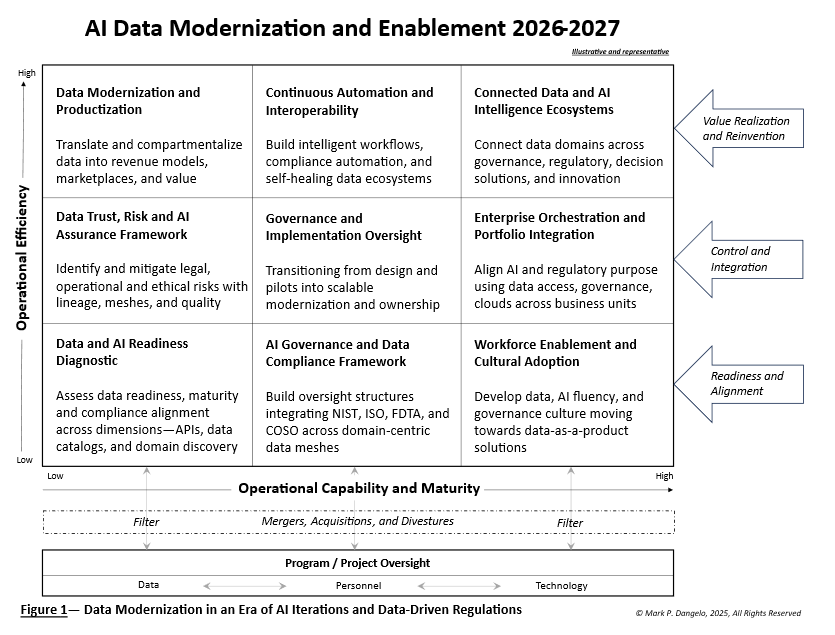

The chart above illustrates how standardized data, reusable AI, and regulatory compliance can reinforce one another in a continuous cycle of enterprise reinvention: standardized data supports reusable AI, which in turn enhances reporting and regulatory alignment. The result is a virtuous loop that replaces isolated projects with scalable, data-driven reinvention.

A call to reusable leadership

The next phase of digital leadership won’t be defined by how sophisticated a company’s models are, but instead by how seamlessly those models integrate into decision-making.

The leaders who succeed will be those who align AI investments with evolving regulatory and data standards. Their organizations will speak a common data language in which AI, compliance, and analytics operate within a shared architectural framework.

As FDTA and SBR converge globally, the line between compliance and competitiveness will blur. What once felt like regulatory overhead will become the foundation of reusable intelligence. Reinvention, in this sense, isn’t a campaign or initiative — it’s a discipline. This is not AI as a project; it’s AI as infrastructure and the architecture of continuous reinvention.

For executives navigating 2026’s convergence of regulation, consolidation, and automation, the difference between thriving and merely surviving will depend on whether they can build organizations that learn, adapt, and continuously reinvent themselves through data.