As generative AI becomes more commonplace, assessing and auditing the technology's performance, capabilities, and potential biases becomes even more important.

In under 10 months, the adoption of generative artificial intelligence (AI) has shattered records across consumer and corporate demographics. Every week, dozens of complementary product suites and competitive solutions are introduced, while AI investment over the last 12 months has rapidly climbed past $100 billion. Yet for all the benefits and intrigue, we already know that today's AI systems are not infallible: they make mistakes, take liberties with their outputs, make up references, and even fudge data gathering.

Despite all the excitement driving adoption and expansion, however, these solutions remain black boxes. With every newly introduced and cross-linked AI system, the stakes grow: the direct, negative customer impact of a chatbot gone astray; a biased machine-learning (ML) solution disadvantaging a demographic; the brand damage of errors that ensembled AI then cascades across systems; and the legal and regulatory exposure created by how difficult these systems are to audit. It will get better, but only with oversight and adaptive iterations.

To make the situation more challenging, the skills required to audit individual and cross-linked AI systems are emerging and scarce, leading to a patchwork quilt of capabilities. For auditors and compliance personnel, the journey toward AI transparency begins not with technology, but with a clear understanding of the AI solution's goals and objectives, then its touchpoints and control limits, and finally its data and technology ecosystem.

The situational reality of assessing AI

Regardless of the industry, business leaders are captivated by AI, and by generative AI in particular, and they are investing heavily to secure their share of a five-year, $5.5 trillion to $7 trillion global GDP expansion directly attributable to these nascent technologies. However, adopting various AI and ML solutions and methods introduces multiple levels of complexity for domain auditing teams; less than 18 months ago, these complexities were conference topics rather than frontline demands.

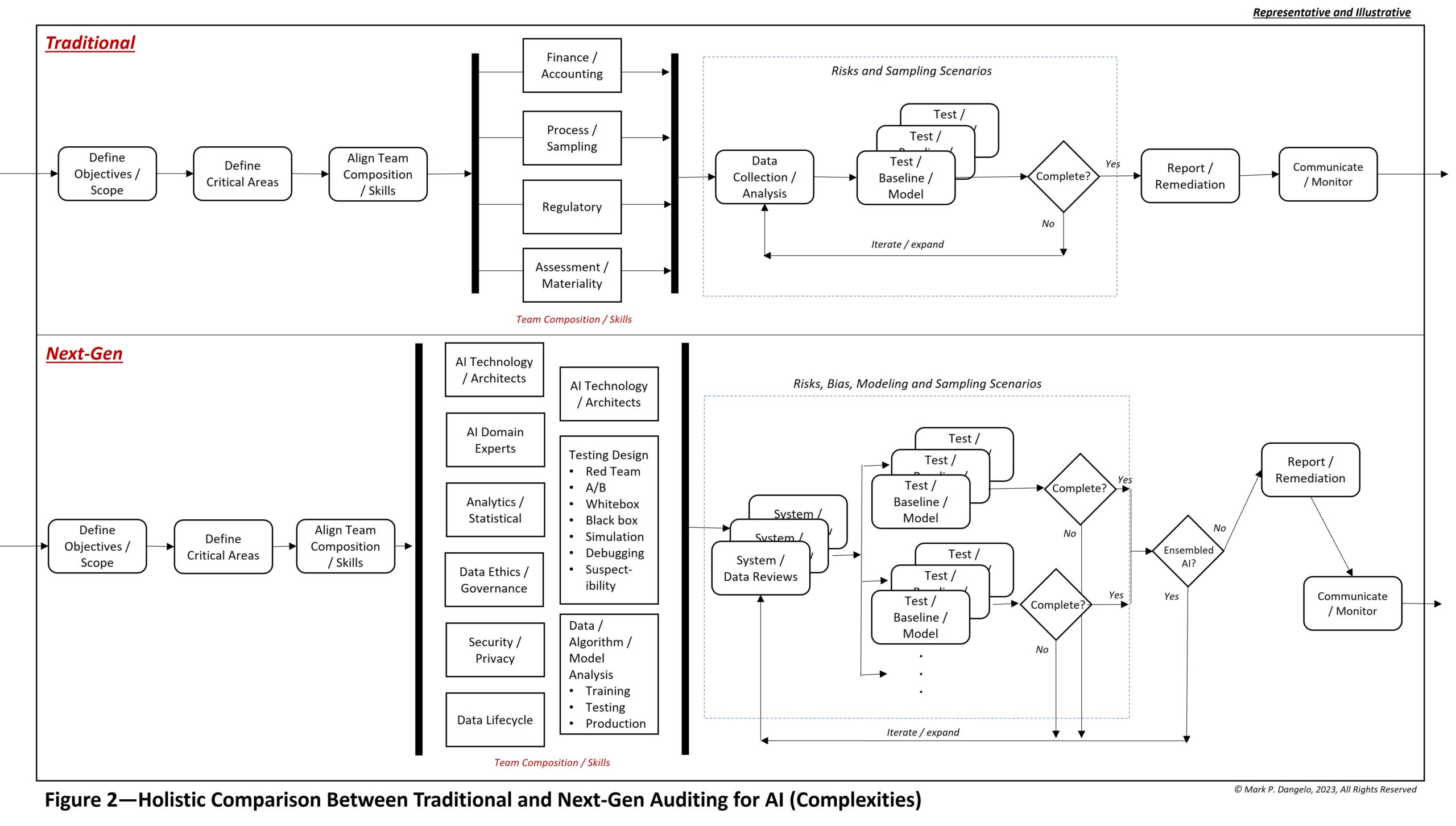

As illustrated below, what was once a known, business-as-usual audit process now carries additional demands and highly segmented skill sets. Compounding the problem are college graduates' lack of interest in auditing and the significant exodus of talent from public accounting firms.

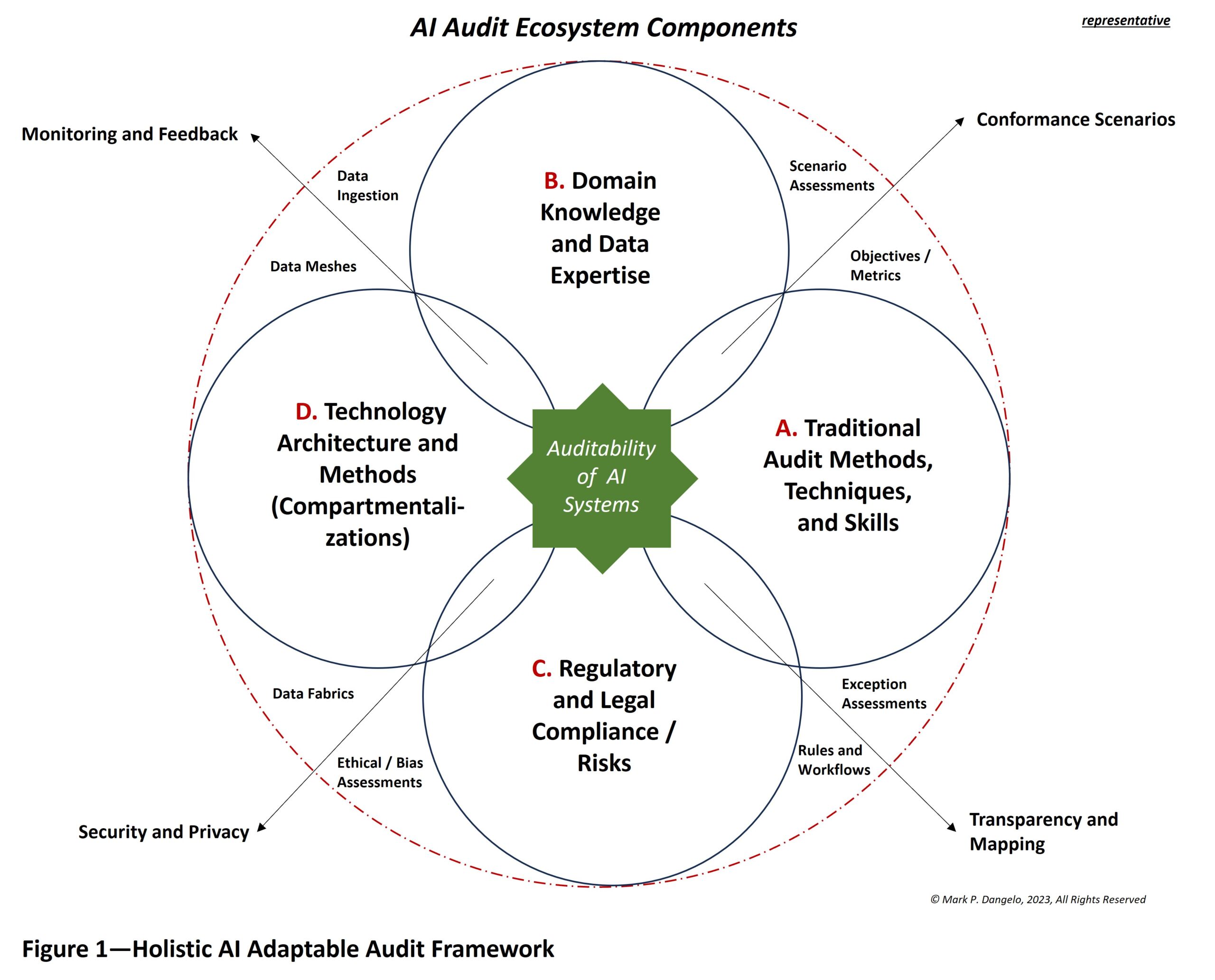

Across categories A through D, the granular taxonomies within the ecosystem show the various current and potential solutions that traditionally fall outside the core competencies of professionally trained audit teams. Where the categories intersect, they share common subject matter.

This graphic implicitly addresses a fundamental challenge with the rapid adoption of innovative AI technologies: the risks hide not only in the algorithms, but also in the data, in their outputs across silos, in the layers of decision criteria, and, most importantly, in the legal and regulatory exposure of unintended consequences.

For audit staff steeped in traditional approaches, data sampling, and process assessments, the science of AI embedded within and across domain systems demands a step-function increase in capability. These upskilling demands must either be met within the assembled audit team or matrixed to highly specialized staff who can efficiently move from sample-based assessment to full data discovery using audit-defined technologies and tools developed specifically for AI audits.

Next-gen demands: “Do no injustice or harm”

For internal or external auditors, assembling the forensic skills needed to analyze AI and assess its conformance to required standards puts audit teams in unfamiliar territory. Teams targeting AI solutions require advanced knowledge of data and computer science, machine and deep learning, and the potential for 100% sampling across algorithmic training, testing, and production data. Beyond the financial and domain knowledge of traditional approaches, auditors must follow how ingested source data feeds AI decisions, embedded workflows, operational rules, and decision alternatives spread across disparate systems, creating complex, opaque interdependencies.
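To make the move from sampling to full-population testing more concrete, here is a minimal sketch in Python, assuming the auditor can export every production decision as a (group, outcome) pair. It computes each group's favorable-outcome rate over all records rather than a sample, then flags any group whose rate falls below a chosen fraction of the highest group's rate. The function names, the record layout, and the 0.8 threshold (borrowed from the "four-fifths" heuristic in U.S. employee-selection guidance) are illustrative assumptions, not a standard this article prescribes.

```python
from collections import defaultdict


def selection_rates(records):
    """Favorable-outcome rate per group, computed over every record (no sampling)."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def flag_disparities(records, threshold=0.8):
    """Return {group: ratio} for groups whose rate is below threshold x the highest rate.

    The 0.8 default mirrors the "four-fifths" heuristic; it is an illustrative
    assumption, not a universal audit standard.
    """
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so there is no ratio to compare.
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    # Hypothetical production decisions: (group label, did the model approve?)
    decisions = (
        [("A", True)] * 80 + [("A", False)] * 20
        + [("B", True)] * 55 + [("B", False)] * 45
    )
    print(selection_rates(decisions))
    print(flag_disparities(decisions))
```

The same functions can be run separately over training, testing, and production extracts, reflecting the point above that all three data sets fall within an AI audit's scope.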

To illustrate this shift in demands and its cascading impact on skills and approaches, the graphic below compares the activities, teams, and iterative approaches of traditional mindsets against adaptive, next-gen AI capabilities. The roles and responsibilities of staff analyzing and assessing AI in all its potential forms, the evaluation playbooks, and the balances and controls are all undergoing a permanent shift in features and functions.

Further, this graphic highlights the sampling differences created by layers of forensically critical AI subcomponents that combine to deliver decisions. As AI grows in capability, each of these layers must be independently verified and validated before the aggregated outputs can be assessed for conformance and remediation.

Think big, start small

Indeed, the link between hope and hype is why multiform AI, which before 2023 was relegated to sophisticated technology firms and academic institutions, will dominate 2024 budgets. Industry demands are outpacing academic theory, leaving gaps in capability and science when it comes to the common forensic analysis and assessment of domain-defined AI decision-making. Industry leaders, AI scientists, and accounting practitioners are rushing to address the gaps in methods and skills, along with the regulatory implications; however, what can audit and industry institutions do to ensure that their prized, efficient AI investments continue to advance ethically, accurately, and transparently?

To begin with, any initial due diligence checklist for AI oversight should include, at a minimum:

- robust engagement planning, scope, and objectives;

- access to artifacts including code, algorithms, and large language model designs;

- skill set identification and staffing matrices;

- data ingestion, sources, and uses (including training, testing, and production data);

- security, ethical digital sourcing and outputs, and privacy;

- regulatory compliance and governance;

- data-decision transparency and results; and

- controls and monitoring processes.

The above list is non-specific and domain-agnostic and should be used only to seed more specific plans of action and audit designs. The promise of precision, domain application efficiencies, and correlated insights from data-driven AI solutions is here to stay.

Moving forward, AI will not revert to the edge of computer science. The mainstreaming of AI is now a freight train with momentum and purpose. For AI to improve without harm, industry and audit leaders cannot afford to stand in front of the barreling locomotive while waiting for academics and politicians to study the path forward.

Industry and audit leaders must break from the pack and embrace the emerging skills needed for AI oversight. Those who fail to address AI's cascading advancements, flaws, and design complexities will likely find their organizations facing legal, regulatory, and investor scrutiny for failing to anticipate and address advanced data-driven controls and guidelines.