As the data and decision models of yesterday begin to show decreased efficacy, the evolving organization of tomorrow needs more and more data that is actively governed, reusable, and componentized

Across the legal, regulatory, tax, risk, and financial services industries, the unexpected economic swings and unintended consequences unleashed by the upheaval of decades of free-trade models require a rethinking of the core. Specifically, the data and decision models that once shepherded profitability now show a significant erosion in efficacy, due mostly to the expansion of granular AI capabilities.

The challenge for many organizational leaders now is to determine, amid continued industry and market volatility, what data directions to take, what investments to make, and most importantly, what transformation skills will be needed to deliver services and compete successfully.

As this year began, forecasting models projected that a larger scale of operations, robust mergers and acquisitions, and efficient, fast-cycle innovations (such as AI, generative AI, retrieval-augmented generation, and now, of course, agentic AI) would be necessary to capitalize on economic growth amid an anticipated dismantling of burdensome regulatory oversight.

However, the new reality unveiling itself has shocked industry leaders. Economic contraction, supply chain implosions, cascading layoffs, opaque risks, and a withdrawal of investor guidance are breaking more than operating models; they are destroying the value of the traditional infrastructure that underpins insights and efficiencies. Additionally, the transformation of core principles and practices is hindered by decades of technical debt and, most recently, by functionally limited AI solutions.

The crumbling core

The shock and awe now disrupting traditional markets challenges the industry cloud-defined growth models that were introduced nearly two decades ago. The core models of operation and system ideation, underpinned by core axioms of data management, no longer provide adequate operating efficiencies or the ROI benchmarked at that time.

Indeed, the current economic rebalancing has fully exposed inherent data weaknesses, and calls into question the measurements, requirements, and design approaches to data success that were once considered advanced.

Although numerous sophisticated data solutions are now commercially available (meshes, fabrics, weaves, governance, metadata, security, and privacy), they quickly become expensive, burdensome IT white elephants. Even within agile delivery (prototyping, pilots, and production), methods of defining cloud or on-premises systems can become obsolete quickly across industries drowning in event data. When these solutions are applied to the core of traditional designs, it becomes apparent that transformational leaders have been asking the wrong initial questions.

They ask, “What is the product? What is the insight? What is the platform we need to build?” However, the more practical, simpler, and impactful question with AI is: “What are we building on?” Analogous to building a house, what is the data foundation that will serve transactional, informational, and AI solutions across use cases and epic stories?

Data velocity, volume, value, variety, and veracity — known as the five Vs of data — have permanently altered organizations’ core infrastructures and future demands. No longer defined by products or services, core capabilities are based instead on vertical data domains, fabrics, flows, reusability, and data architectures that are expressed in use cases which identify needs across horizontal corporate functions of legal, compliance, tax, audit, and risk.

Why it matters

As organizations continue to rapidly evaluate and adopt AI-enabled solutions, it is data that increasingly becomes critical for day-to-day delivery. Data has become part of the organization’s infrastructure and represents a set of governed, reusable, and interoperable data domains that powers operations, analytics, and now iterative AI applications.

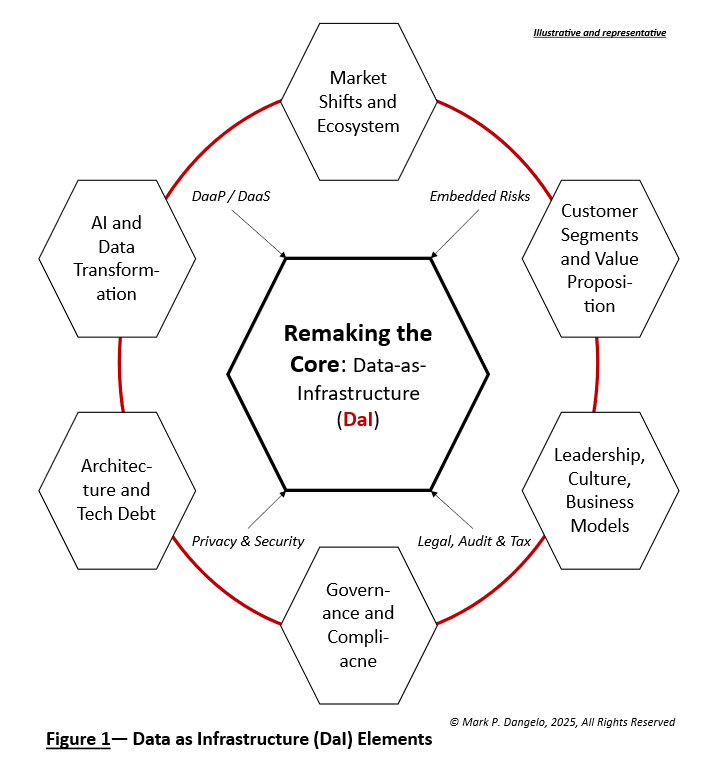

Unlike traditional approaches that create structured schemas or data lakes full of unstructured streams, a business core built on data as infrastructure (DaI) delivers an orchestrated architecture that ensures reuse, on-demand access, and governance. Critically, DaI is not another tool, data warehouse, or API. As illustrated in Figure 1 above, the evolving organization of tomorrow needs more and more data that is actively governed, reusable, and componentized.

Whereas prior IT data oversight initiatives were overly complex, expensive, and centralized, while returning limited value, DaI represents the expansion of data-as-a-service and data-as-a-product by fusing modern data architectures, AI, and strategic transformations that are designed to reshape core fundamentals. Fueled by business requirements, use cases, and epic stories needing traceability, DaI serves to deliver adaptable regulatory compliance, auditability, risk predictions, and customer trust against compartmentalized data designs.

Because DaI is compartmentalized, it progresses like a step function: iterative, compounding, and capital-light, in contrast to large commercial off-the-shelf solutions that require outsized investments for limited returns.

What’s important and imperative

As AI reshapes processes, interactions, and outcomes, the operational agility of data foundations determines an organization's capacity to innovate and its continued relevance. This means that legacy thinking and methods will face a harsh reality: the existing data foundations are too brittle, too segmented, and too costly to support future demands and interoperability.

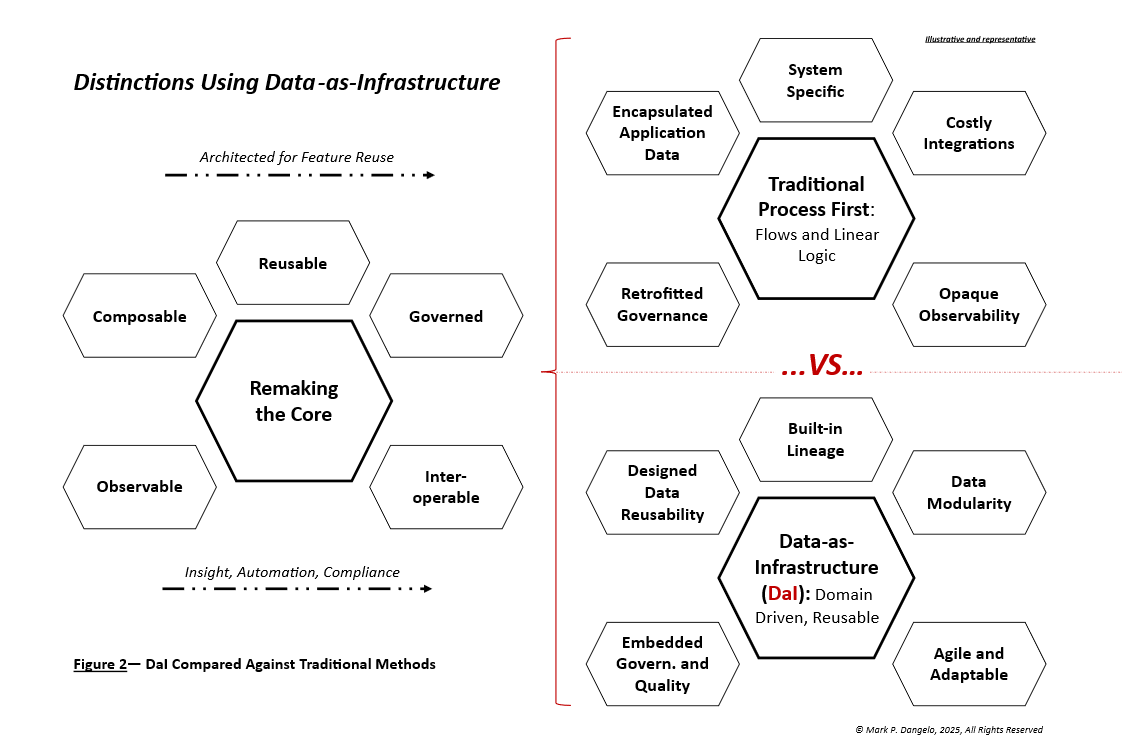

Rethinking the core of the organization now demands a re-purposing of how data is used and reused, compartmentalized, structured, governed, and embedded within systems across the enterprise. Figure 2 illustrates the distinctions between traditional process-first approaches and DaI across five taxonomy traits of remaking the core — observable, governed, composable, reusable, and interoperable.

Rather than lifting and shifting databases and context to alternative data ecosystems, DaI maps acquired data into shared infrastructure layers. This layering recognizes legal separation of entities, independent domain governance, federated AI data demands, and regulatory compliance within a common architecture that can satisfy diverse constituencies. In implementing DaI, the challenge is not the rationale, benefits, or tightly coupled implications; rather, it is identifying the practical segmentation and methods necessary for continuous delivery.

Practical implementation

DaI is an evolution of an organization’s data products and services across AI and its operational implementations that ultimately creates a data infrastructure that is reliable, reusable, and repeatable. It’s a long-term approach and design that is implemented in short-term bursts, making enterprise agility economical against changing markets.

As noted, DaI requires a structured and cross-functional series of interconnected sprints that blends robust data architecture with outcome-driven milestones. Organizations need to begin with a strategic framework that encompasses existing baselines and tool sets. Then, they need to stand up reusable components — data isolation modules — that are self-contained and tied to domains. Finally, they should map out the orchestrations within the use cases and epic stories which can ensure observability and trustworthiness.
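
To make the idea of a reusable component more concrete, the following is a minimal sketch of what a "data isolation module" and a use-case orchestration might look like in Python. The class name `DataIsolationModule`, the `orchestrate` function, and the sample domains and fields are all hypothetical illustrations, not part of any DaI product or standard.

```python
from dataclasses import dataclass, field
from typing import Callable

Record = dict[str, object]


@dataclass
class DataIsolationModule:
    """Hypothetical self-contained component tied to one data domain."""

    domain: str
    validate: Callable[[Record], bool]  # domain-owned quality rule (assumed)
    records: list[Record] = field(default_factory=list)
    rejected: int = 0

    def ingest(self, record: Record) -> bool:
        """Accept a record only if it passes the domain's own quality rule."""
        if self.validate(record):
            self.records.append(record)
            return True
        self.rejected += 1
        return False


def orchestrate(use_case: str, modules: list[DataIsolationModule]) -> dict[str, object]:
    """Summarize, per domain, what a use case can observe about its inputs."""
    return {
        "use_case": use_case,
        "domains": {
            m.domain: {"accepted": len(m.records), "rejected": m.rejected}
            for m in modules
        },
    }


# Illustrative domains and records (assumed, not from the article).
contracts = DataIsolationModule("contracts", validate=lambda r: "contract_id" in r)
clients = DataIsolationModule("clients", validate=lambda r: "client_id" in r)

contracts.ingest({"contract_id": "C-1", "client_id": "K-9"})
contracts.ingest({"note": "missing identifier"})
clients.ingest({"client_id": "K-9"})

report = orchestrate("contract-renewal-review", [contracts, clients])
```

In this sketch each module carries its own quality rule and counters, so the same component can be reused across use cases while the orchestration layer only reads the observability summary.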

Seeking the DaI solution

When an organization’s data core is fragmented, lacks scale, is untrustworthy, and is of poor quality, DaI is the iterative, positive transformational approach to create the kind of data architecture that delivers durability, practical governance, and innovative modernization. And instead of duplicating data systems, DaI maps key domains such as clients, contracts, orders, compliance, and legal into a shared data infrastructure.

DaI is purposely designed to work organization-wide while delivering within a domain or department, with data policies, access controls, and quality built in and owned by localized data product teams.
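
One way to picture policies that are built in and owned locally is a small policy object that each domain team maintains alongside its data. The sketch below is an assumption-laden illustration: `DomainPolicy`, its fields, and the team and role names are hypothetical and are not drawn from the article or from any specific governance tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainPolicy:
    """Hypothetical policy owned by a local data product team."""

    domain: str
    owner_team: str
    allowed_roles: frozenset[str]    # access control (assumed role names)
    required_fields: frozenset[str]  # minimal quality rule (assumed fields)

    def can_read(self, role: str) -> bool:
        """Return True if the role is permitted to read this domain's data."""
        return role in self.allowed_roles

    def missing_fields(self, record: dict) -> list[str]:
        """List required fields that the record lacks, in sorted order."""
        return sorted(self.required_fields - set(record))


# Illustrative policy for a contracts domain (all values are assumptions).
contracts_policy = DomainPolicy(
    domain="contracts",
    owner_team="legal-data-products",
    allowed_roles=frozenset({"legal_analyst", "compliance_officer"}),
    required_fields=frozenset({"contract_id", "effective_date"}),
)

gaps = contracts_policy.missing_fields({"contract_id": "C-1"})
```

Keeping the policy immutable and attached to the domain means an organization-wide layer can query it without taking ownership away from the team that maintains it.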

Taken holistically, DaI stands up new business models that organizations need to rapidly scale both their operational and analytic systems while allowing them to compete in today’s marketplace. In the end, when organization leaders rethink their core data and infrastructure using DaI, everything else becomes possible.
