Reliable, scalable AI depends on reinventing the data core — not just upgrading technology — and using business-driven, reusable, and compliant data foundations

Key takeaways:

- The real bottleneck for AI is the data core: AI is advancing rapidly, but most organizations’ data architectures, governance, and legacy assumptions can’t keep up. Without a repeatable, business-aligned data foundation, AI initiatives will struggle to scale and deliver reliable results.

- AI success relies on explainable, traceable, and reusable data: For AI to be reliable and compliant, organizations must design data environments that emphasize lineage, semantics, and trust. That means compliance and auditability need to be built into the data core, not added on later.

- Businesses should shift from tool-centric upgrades to business-driven, data-centric reinvention: Efforts focused only on modernizing tools or platforms miss the root issue, which is legacy data structures. Leaders must prioritize building a cohesive, reusable data core that aligns with business strategy.

This article is the first in a blog series exploring how organizations can reset and empower their data core.

Across boardrooms, regulatory briefings, and strategic off-sites, leaders are asking with growing urgency some variation of the same question: How do we make AI reliable, scalable, auditable, and economically defensible? The surprising answer is not in the AI technology, nor in the cloud stack, nor in another round of system upgrades.

It is in the data. Not the data we store, not the data we report, and not the data we move across our pipelines. It is in the data that we must now explain, contextualize, trace, validate, and reuse continuously as agentic AI becomes embedded in every workflow, every decision system, and every regulatory outcome.

The stark reality across industries is that AI is maturing faster than our data cores can support. For the first time, technology is not the bottleneck. Architecture, organizational assumptions, and governance strategies are. More importantly, the lack of a repeatable, business-aligned data foundry has become the strategic inhibitor standing between today’s operations and tomorrow’s autonomy-ready enterprises.

The realities of 2026

As 2026 gets underway, the pressures of regulation, AI adoption, data lineage requirements, and cross-system consistency have converged into a single strategic reality: We can’t keep modernizing data at the edges. The data core itself must be reimagined and compartmentalized.

For leaders across highly regulated industries, the challenge is recognizing that our data architectures were never designed for the world we’re moving into. Historically, solutions were built for predictable siloed-data systems, linear programmatic processes, and dashboard reporting. Today’s demands are continuous, variable, cross-domain, and machine-interpreted, and they are not bound by traditional methods and techniques of process efficiency and system adaptability. Tomorrow’s systems will be comprehensively trained by data. To properly frame these realities, leaders must understand:

- Agentic AI exposes weak data architecture immediately: Models may scale, but data debt does not. This is a new, priority constraint.

- Lineage, semantics, and trust scoring, not models, will determine enterprise readiness: AI will only be as reliable as the meaning and traceability of enterprise data.

- Compliance cannot be retrofitted; rather, it must be designed into the data core: Compliance no longer ends in reporting. It must exist upstream and be addressed continuously.

- Return on investment in AI is impossible without composable, modular, and reusable data products: Data that cannot be composed, traced, and made consistent cannot be automated.

- The bottleneck is not talent or tools; it is the absence of a data foundry: Without robust, industrial-grade data production, AI will remain fragmented and experimental.

By delivering a practical, business-first path integrated with a data-centric design, organizations enable reuse, compliance, and measurable ROI. AI is accelerating, but data readiness is not. This mismatch is where many transformation efforts die.

Agentic AI demands a data environment that simply does not exist with most legacy solutions. It requires decision-aligned semantics, federated trust scoring, cross-domain lineage, dynamic compliance overlays, and consistent interpretability. No model, no matter how advanced, can compensate for data environments that have been engineered for static reporting and linear process logic. We are entering a cycle of reinvention in which data becomes the organizing principle.
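To make these requirements a little more concrete, here is a minimal Python sketch of what a reusable data product might carry with it. Everything in it is an illustrative assumption, not part of any specific framework: the `DataProduct` record, the `compose`, `lineage`, and `usable_by_agent` helpers, the rule that a derived product is only as trustworthy as its weakest input, and the `"PII"`/`"PCI"` tags are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical data product that carries its own context."""
    name: str
    meaning: str                               # business semantics, in plain language
    sources: tuple = ()                        # upstream products (lineage)
    trust: float = 1.0                         # assumed trust score in [0, 1]
    compliance_tags: frozenset = frozenset()   # obligations attached to the data


def compose(name, meaning, inputs, own_trust=1.0):
    """Build a new product from existing ones, inheriting lineage and policy."""
    return DataProduct(
        name=name,
        meaning=meaning,
        sources=tuple(inputs),
        # Assumption: a derived product is no more trustworthy than its weakest input.
        trust=min([own_trust] + [p.trust for p in inputs]),
        # Compliance obligations flow from upstream to downstream.
        compliance_tags=frozenset().union(*(p.compliance_tags for p in inputs)),
    )


def lineage(product):
    """Return the names of all upstream products, depth-first."""
    names = []
    for src in product.sources:
        names.append(src.name)
        names.extend(lineage(src))
    return names


def usable_by_agent(product, min_trust, allowed_tags):
    """Gate: trusted enough, and every obligation is one the agent may handle."""
    return product.trust >= min_trust and product.compliance_tags <= allowed_tags


customers = DataProduct("customers", "Verified customer master records",
                        trust=0.95, compliance_tags=frozenset({"PII"}))
payments = DataProduct("payments", "Settled payment transactions",
                       trust=0.80, compliance_tags=frozenset({"PCI"}))
exposure = compose("customer_exposure", "Outstanding exposure per customer",
                   [customers, payments])
```

In this sketch, `exposure` inherits both compliance tags and the lower of the two trust scores, so an agent cleared only for `"PII"` data would be refused by `usable_by_agent` without any downstream reporting step. The point is the design choice, not the code: lineage, meaning, trust, and compliance travel with the data instead of being reconstructed afterward.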

The business need, not the engineering myth

Executives are rightfully fatigued by transformation programs. They have seen modernization initiatives expand scope, escalate cost, and ultimately underdeliver. They have heard the promises of clean data, enterprise data platforms, microservices, cloud migration, and AI-readiness. However, when agentic AI begins interacting with these ecosystems, the fragility of the entire operation becomes instantly visible.

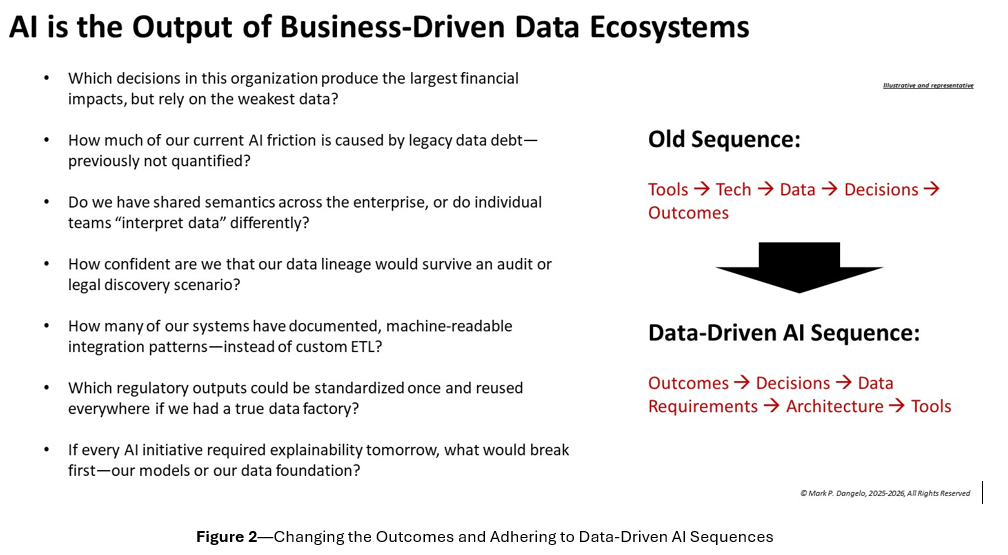

Why? Because most data modernization initiatives have been driven by tool-centric solutions rather than architecture-centric capabilities. Prior data governance was about oversight, not the enablement and reuse that emerging AI designs demand. Often, legacy methods kept their audit and lineage contained within siloed processes, bridging them with replicated data warehouses; extract, transform, load (ETL) systems; and application programming interface (API) protocols.

However, this tool-centric, legacy-enabled approach is the problem. We keep optimizing the wrong layers, and we keep modernizing the components.

As a result, we too often see that AI pilots succeed, but enterprise scaling fails. Or that regulatory reporting improves marginally, but compliance costs increase. Or that M&A integrations appear straightforward, but post-close data convergence drags on for years.

The gap between ambition and reality

As a solution, a data foundry approach corrects that imbalance by formalizing the factory-grade patterns required to support agentic AI systems. It becomes the production line for reusable data products, compliant semantics, and decision-aligned datasets. It also eliminates reinvention by institutionalizing repeatable structures; and, most importantly, it restores business leadership over AI outcomes, rather than relegating decision logic to engineering workstreams and emerging technologies.

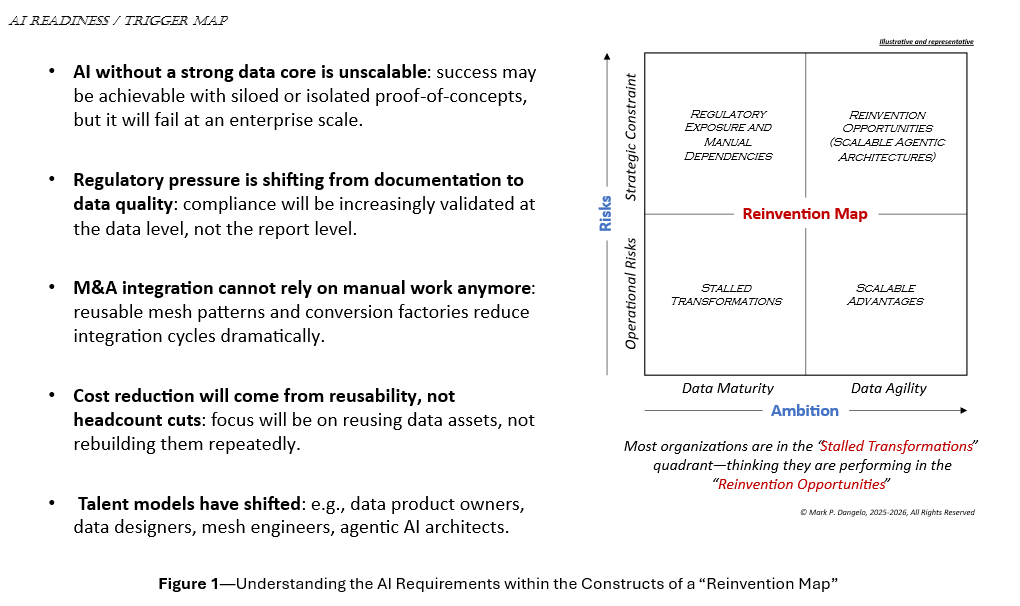

As illustrated above, AI requirements and realities need to be tempered with business demands, organizational risks, and data agility capabilities (including skill sets) to achieve realistic roadmaps of action rather than strategic aspirations.

Today, the question isn’t whether organizations understand the importance of data; it’s whether leaders know how to build environments in which data becomes reusable, trustworthy, and ready for agentic AI. The issue, however, continues to be that our data cores (the architectural, operational, and standards ecosystems beneath all this) were not designed for continuous change.

Before they mobilize and execute against AI plans, business leaders need to answer the question: What business decisions are we trying to improve, and what data do these decisions actually require, both today and tomorrow?

The organizations that will lead in the coming decade will do so not because they found the perfect technology stack, but because they built a reusable, continuously improving data foundation that can support AI, regulation, risk, and innovation simultaneously.

The question for leaders then becomes: Are we prepared to reinvent?

The work begins now — quietly, deliberately across the data core where tomorrow’s competitive advantages will be created. The chart below illustrates the business-driven AI elements that must be addressed, and how the old sequence of system provisioning must be replaced, beginning with outcomes and ending with engineered AI tools.

AI is an output — a capability that’s unlocked after the underlying data foundation becomes coherent, traceable, explainable, and aligned with business decisions. For leaders, the data core is no longer a back-office concern or one-off IT initiative. It is a strategic asset that can shape speed, resilience, and trust across the organization.

In the next post in this series, the author will explain how to architect an integrated data core, particularly through the AXTent architectural framework for regulated organizations.